The temporal auditory imaging theory

The original publication is available here (also mirrored here) and on its dedicated webpage, hearingtheory.org.

The informal summary from the original manuscript (pp. iv–x) is given below.

Vision and hearing

Of the five traditional senses (vision, hearing, touch, taste, and smell), hearing and vision superficially share the most in common, something that has led to recurrent juxtapositions and comparisons over millennia. For a start, the peripheral organs themselves, the eyes and the ears, are placed in proximity and at similar height on the human face, both come in pairs, and both provide near-nonstop information from a distance about the immediate and remote environments. On top of that, both are central for communication and both are used expressively in many art forms.

As the understanding of the senses has matured over the last two centuries, additional characteristics have stood out, the main one being that both hearing and vision are based on physical wave stimuli that radiate toward the body—sound or light waves—albeit at very different characteristic speeds, wavelengths, and frequencies. With the advent of psychophysics, analogies between visual and auditory perception were made clear too, which occasionally turned out to have parallels in the respective structure of the relevant brain area or its presumed method of processing the stimuli.

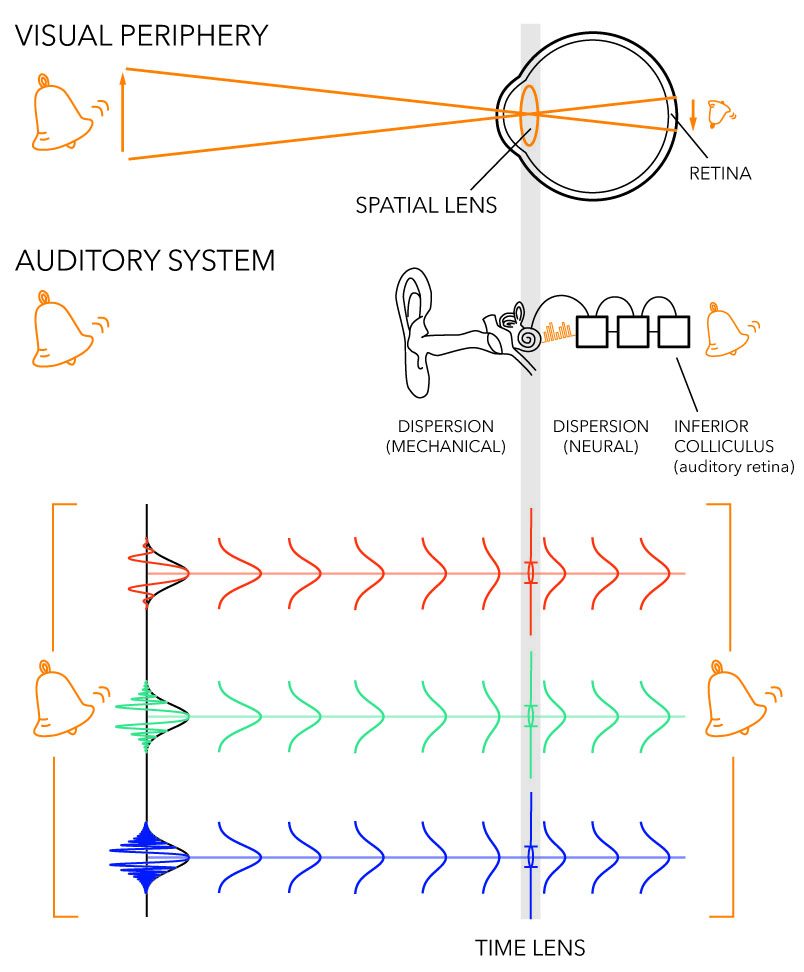

The human eye works like a camera, complete with an object, a lens, a pupil to limit the amount of light, and a screen that is the retina, on which an optical image appears upside down. Seeing the image simplifies the understanding of the eye, as it is intuitively clear that the ideal image is a demagnified replica of the object, which has to be as sharp and free of aberrations (various distortions in the two-dimensional image) as possible. The eye achieves focus using a variable focal length of its lens, which is controlled by accommodation. Once the image hits the retina, it is transduced by photoreceptors that also filter the light into three broad frequency ranges, which form the basis for color perception. After initial signal processing in the retina, a neural image is sent to the visual cortex through the optic nerve and through the thalamus.

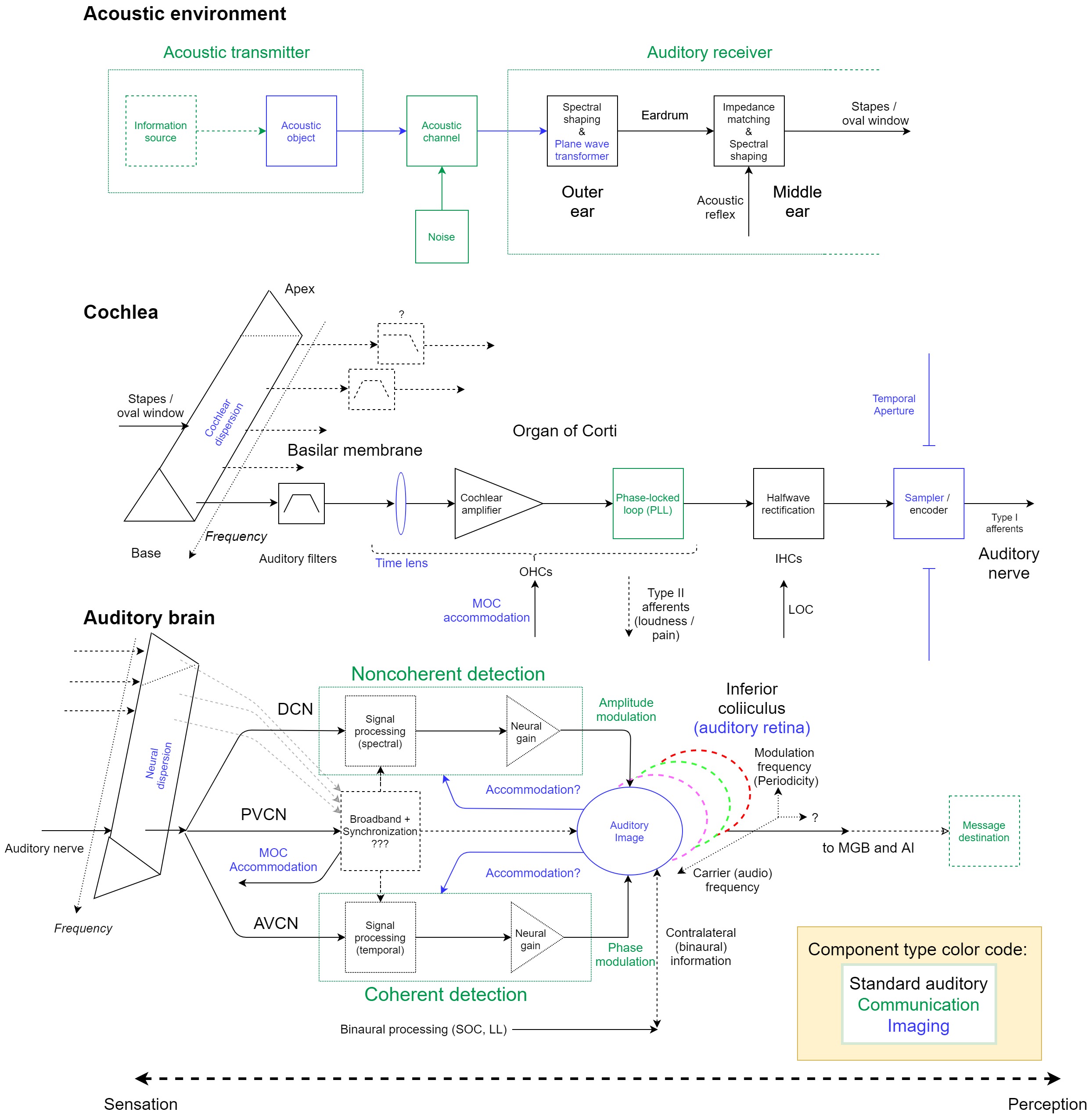

A quick inspection of the ear does not reveal an equally obvious mechanism of operation and certainly nothing that looks (or sounds) like a lens or an image, let alone a two-dimensional one. Optics does not apply here, so an intricate combination of principles from physics, engineering, and biology must be invoked to explain its operation. The ear also does not work as a tape recorder, which might be thought of as the associative analog to the camera for sound. It does not "record" the incoming sound as a broadband signal, let alone "play" it back as is. Rather, after a series of complex mechanical transformations, the organ of Corti in the cochlea filters the broadband sound into numerous narrowband auditory channels that are sometimes independent of each other, and yet interact in other cases, according to complex rules that must be uncovered through experiment. The sound is finally perceived as broadband, as though the input has been perceptually resynthesized, following signal processing in different brain areas related to the auditory system.

Hearing points

The present theory attempts to show how the principles of optical imaging apply to hearing notwithstanding. The recipe for doing so is straightforward as long as several tropes of hearing science are shed. For the sake of this summary, let us treat the following statements as correct. They will all be properly demonstrated and motivated in the main text:

1. Time is for hearing what space is for vision.

2. Wave physics does not stop at the auditory periphery.

3. Constant frequency is the exception, not the rule.

4. The ear is not a lowpass receiver, but rather a (multichannel) bandpass system.

5. Acoustic source coherence propagates in space according to the wave equation.

6. Information arriving at the auditory brain is discretized and the rules of sampling theory must apply.

Each one of these statements on its own may not be particularly novel or controversial, at least in some contexts of hearing theory, but once their totality is internalized, new ways to understand hearing inevitably arise.

Inferring the auditory image

The visual image is spatial, since it is distributed over the area of the retina, where a two-dimensional projection of three-dimensional objects appears as a pattern of light. In imaging analysis, it is customary to "freeze" the progress of time at an arbitrary moment and look at a single still image, which contains much of the information from the object and its environment, all simultaneously available within the same image. The passage of time entails movement of the object(s) and observer, which can be understood as incremental changes to the reference still image. In contradistinction, it is exceedingly difficult to make sense of a "frozen" image of sound (for example, a particular geometrical configuration of the traveling wave in the cochlea), which carries limited information about the auditory scene on the whole. Here, it is necessary to let time pass and hear how the different sounds develop and interact in order to be able to say something meaningful about the acoustic situation they represent and how they are distributed in space. Hence, we intuitively arrive at the space-time analogy between vision and hearing, which was epitomized in Point 1.

Original illustration by Jody Ghani

Further pushing the logic of Point 1 entails that if the visual image occurs in space, then a hypothetical auditory image must occur in time. Then, we should expect that just as the optical object-image pair in vision can be represented as a spatial envelope that propagates between points in space (e.g., between the object origin and the image origin), so should the acoustical object-image pair of hearing be representable by a temporal envelope, between reference points in time. Both object types should have a center frequency that carries the envelope, as there is no physical difference in the way that the information about the objects is borne by waves.

Another important substitution is to find the temporal equivalent of diffraction, which in optics determines how different parts of the light waves interfere and change their shape, as a result of scattering by various boundaries and objects on their path to the screen. Diffraction is really a general term for wave propagation from the object, which may or may not encounter scattering obstacles on its way to the screen. Switching between the spatial and temporal dimensions (Point 1), diffraction is replaced by group-velocity dispersion, in which the shape of the temporal envelope is impacted by differential changes to its constituent frequencies. We recall that the cochlea itself is inherently a dispersive path, so that the acoustical signal that enters the cochlea is automatically dispersed. Note that while we often talk about dispersion, we are actually concerned with its derivative, the group-velocity dispersion, which goes by different names, such as group-delay dispersion and phase curvature.

Next, if there is any chance for us to construct an imaging system within the auditory system, we should also be looking for a temporal aperture: something that limits the duration of signal that can be processed at one point in time (really, sampled) for a given chunk of acoustical input. Here, the neurons that transduce the inner hair cell motion produce spikes that are limited in time by definition, so there is a time window in effect that continuously truncates the signal into manageable chunks.

While the above steps are relatively simple endeavors, completing the identification of the temporal imaging system in the ear requires bolder steps that are borderline speculative. First, we require an additional dispersive section in the auditory brain, even though the representation of the acoustical signal is by then fully neural (Point 2). Current science has it that there is no neural dispersion in the auditory brain, whereas the present work claims otherwise, as can be demonstrated by several measurements. While the precise magnitude of dispersion is difficult to ascertain, it is readily evident that no cochlear measurement of the group delay based on otoacoustic emissions has ever matched the auditory brainstem response measurements that include the brainstem as well. This discrepancy translates to a non-zero group-velocity dispersion of the path difference, which is mostly neural.

The second speculative step is to identify a lens. A temporal imaging system requires a "time lens", rather than a spatial lens, although it is not strictly necessary (a pinhole camera does not have a lens, but still produces a sharp image, as long as the aperture is very small). A time lens performs the same mathematical operation as the spatial lens in the eye, only over one dimension of time instead of over two dimensions of space, and where frequency is a variable rather than a constant (Point 3). Indeed, an inspection of the dynamic properties of the organ of Corti that were recorded in several state-of-the-art physiological measurements reveals a phase dependence in time, frequency, and space that is symmetrical in shape. Such a phase response can be readily attributed to a time lens, which is modeled using a quadratic phase function, a form of phase modulation.
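To make the quadratic phase function concrete, the following minimal sketch multiplies a constant-frequency carrier by exp(i t²/(2s)) and checks that the instantaneous frequency then sweeps linearly in time, which is what makes frequency "a variable rather than a constant". The curvature symbol `s`, the sign convention, the function names, and every numerical value are illustrative assumptions and are not estimates taken from the theory.

```python
import cmath
import math

def time_lens(t, s):
    """Quadratic phase modulation exp(i * t^2 / (2 s)); s is an assumed curvature in s^2."""
    return cmath.exp(1j * t * t / (2.0 * s))

def instantaneous_frequency(signal, dt):
    """Instantaneous frequency (Hz) from the phase step between neighbouring samples."""
    freqs = []
    for a, b in zip(signal[:-1], signal[1:]):
        dphi = cmath.phase(b * a.conjugate())  # wrapped phase increment
        freqs.append(dphi / (2.0 * math.pi * dt))
    return freqs

fs = 48_000.0   # sampling rate in Hz (illustrative)
dt = 1.0 / fs
f_c = 1_000.0   # carrier frequency in Hz (illustrative)
s = 1e-6        # lens curvature in s^2 (hypothetical value)

# 10 ms window centred on t = 0
times = [(n - 240) * dt for n in range(481)]
carrier = [cmath.exp(2j * math.pi * f_c * t) for t in times]
lensed = [c * time_lens(t, s) for c, t in zip(carrier, times)]

f_inst = instantaneous_frequency(lensed, dt)
# Slope of the frequency sweep in Hz per second; analytically 1 / (2 * pi * s)
sweep_rate = (f_inst[-1] - f_inst[0]) / (times[-2] - times[0])
print(f"sweep rate: {sweep_rate:.1f} Hz/s")
```

The carrier stays near `f_c` at the centre of the window and is shifted down before it and up after it, so a quadratic phase in time acts as a linear frequency modulation.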

Putting the system together

We have now identified the four necessary elements of a basic temporal imaging system in the ear: cochlear dispersion, cochlear time lens, temporal aperture, and neural dispersion. The values of the different elements can be roughly estimated for humans following an analysis of available physiological data from the literature. These estimates can also be cross-validated using human psychoacoustic data from other sources.

Although we are dealing with sound, now we are in the conceptual realm of optics, which has devised a number of powerful analytical tools to characterize the image and objectively assess its quality. For example, it is possible to compute whether the above combination of elements produces a focused image (putatively, inside the brain), that is, a temporal envelope carried by a center frequency that is propagated to the midbrain or thereabout, where the "auditory retina" resides. Surprisingly, the answer is a definite "no". The auditory image is defocused, unlike the optical image that appears on the retina in normal conditions. However, in vision, we know that information about the object is superior when the image is focused (for instance, try to read a blurred text from a distance).
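A rough sketch of such a focus computation is given below for a Gaussian temporal envelope that passes through a dispersive section, a time lens, and a second dispersive section. It assumes one particular sign convention (spectral phase exp(i u ω²/2) for dispersion and temporal phase exp(i t²/(2s)) for the lens), under which the in-focus condition takes the thin-lens form 1/u + 1/v = 1/s. The names `u`, `v`, `s`, the helper functions, and the numbers are hypothetical and are not the estimates derived in the main text; the aperture is ignored.

```python
import math

def disperse(q, gdd):
    """Quadratic spectral phase exp(i * gdd * w^2 / 2) acting on a(t) = exp(-t^2 / (2 q))."""
    return q - 1j * gdd

def lens(q, s):
    """Quadratic temporal phase exp(i * t^2 / (2 s)) acting on a(t) = exp(-t^2 / (2 q))."""
    return 1.0 / (1.0 / q - 1j / s)

def duration(q):
    """Width of the envelope magnitude |a(t)| = exp(-t^2 * Re(1/q) / 2)."""
    return 1.0 / math.sqrt((1.0 / q).real)

def focused_v(u, s):
    """Second dispersion that satisfies 1/u + 1/v = 1/s (assumed convention)."""
    return 1.0 / (1.0 / s - 1.0 / u)

def image_duration(t0, u, s, v):
    """Envelope duration after dispersion u, time lens s, and dispersion v."""
    q = complex(t0 ** 2, 0.0)
    q = disperse(q, u)
    q = lens(q, s)
    q = disperse(q, v)
    return duration(q)

# Arbitrary units: durations in ms, dispersions and lens curvature in ms^2
t0, u, s = 1.0, 3.0, 1.0
v_focus = focused_v(u, s)                      # 1.5 for these values
in_focus = image_duration(t0, u, s, v_focus)   # equals |v/u| * t0
defocused = image_duration(t0, u, s, 3.0)      # imaging condition violated
print(in_focus, defocused)
```

When the condition holds, the output envelope is a scaled replica of the input with magnification |v/u|. When it does not, the envelope is smeared beyond that scaled duration, which is the temporal counterpart of a defocus aberration.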

Why should hearing be any different from vision and be defocused rather than sharply focused? Why would we want to hear sounds that are blurry rather than sharp? Answering these questions requires us to revisit the idea of group-velocity dispersion and establish its relevance to realistic acoustic signals. This will help us establish the meaning of sharpness and blur in hearing.

Spatial blur

In spatial imaging, there are two general domains of blur. In the geometrical one, light "rays" are traced following refraction. Every object can be thought of as a collection of point sources in a continuum, from which light rays diverge in all directions, each carrying information about the point it emanated from. The goal of imaging is to collect the rays so they form the same pattern of light in another region in space as they do at the object position. This generally includes a linear scaling factor (magnification), which does not have direct bearing on the fidelity of the image that is otherwise a one-to-one mapping of the object in space. Deviations from the one-to-one mapping in two dimensions, when the rays do not converge exactly where they should in order to reconstruct the object, are called aberrations. An out-of-focus imaging system has a "defocus" aberration, which entails a lack of convergence of the rays coming from different directions on the screen, so that information from different points of the object is "mixed" at the position of the image, in a way that is visible. The geometrical form of blur is the most dominant one when the wavelength of the light is much smaller than the object and also smaller than the different obstacles in the optical path to the image.

On the other extreme, when the wavelength of the light is comparable to the details of the object or the aperture, or other things that scatter the light on the way to the screen, then the effect of diffraction may be visible in the image as different interference patterns that can distort the details of the object. These may be thought of as diffraction blur, although the underlying mechanism is very different from geometrical blur. Effects here, if they are visible at all under normal conditions, tend to appear along edges and around very fine details of the image.

With these rough definitions in mind, we note that sharpness is simply the absence of visible blur, or blur that is quantified to be below a certain threshold.

Auditory blur and coherence

Back to hearing, how does the auditory image (really, the temporal envelope carried by a high frequency) ever become blurred? First, we have to transform the two types of blur into temporally relevant phenomena (invoking Point 1 again). An example of geometrical blur is relatively easy to see, since it manifests in reverberation. Here, the information from the source contained within a point in time (or rather, an infinitesimally short interval) arrives at the receiver mixed with information originating in other points in time. The mixing is asynchronous, so it adds up randomly and does not interfere. A corollary is that direct or free-field (anechoic) sound suffers from no geometrical blur at the input to the ear.

The spatial diffraction blur can be analogized to temporal dispersion blur. Group-velocity dispersion entails that every component of the temporal envelope propagates at a somewhat different velocity. Temporal obstacles (i.e., those that can be expressed as filters or time windows) that are approximately proportional to the period of the carrier wave may impose a differential amount of delay on the different components of the envelope spectrum. The result is similar to interference in time and is, therefore, a form of blur of the temporal envelope that is analogous to the diffraction blur of the spatial envelope.

It appears that in order to know whether the different types of blur ever apply in reality, it is necessary to know how much bandwidth the acoustic source occupies. If the bandwidth is very narrow (with the extreme being that of a pure tone), then group-velocity dispersion is unlikely to have any effect, because only a single velocity is relevant per given frequency. However, as the bandwidth becomes wider, more and more frequencies are subjected to differential group-velocity dispersion, so the envelope may become blurry if the dispersion is high or if it is accumulated over large distances. There is, however, an effective limit imposed on the bandwidth here, because the auditory system analyzes the acoustic signal in parallel bandpass filters, each with a finite bandwidth, that together cover the entire audio spectrum. Therefore, the maximum relevant bandwidth of a signal has to be related to the auditory filter in which it is being analyzed. Either way, it is realistic signals that are naturally modulated in frequency and do not have constant frequency (Point 3) that may experience the effects of group-velocity dispersion most strongly.
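The bandwidth dependence can be illustrated with the standard closed-form result for a Gaussian envelope exp(-t²/(2 t0²)) that acquires a quadratic spectral phase: its duration grows by the factor sqrt(1 + (GDD / t0²)²). In the sketch below, the group-delay dispersion value and the two envelope durations are hypothetical numbers chosen only to contrast a narrowband (long) envelope with a broadband (short) one.

```python
import math

def broadened_duration(t0, gdd):
    """Duration of a Gaussian envelope exp(-t^2 / (2 t0^2)) after a
    quadratic spectral phase with group-delay dispersion gdd (in s^2)."""
    return t0 * math.sqrt(1.0 + (gdd / t0 ** 2) ** 2)

gdd = 1e-6  # hypothetical group-delay dispersion in s^2

# A long envelope occupies a narrow bandwidth; a short one occupies a wide bandwidth
narrowband = broadened_duration(10e-3, gdd)   # 10 ms envelope
broadband = broadened_duration(0.5e-3, gdd)   # 0.5 ms envelope

print(f"narrowband stretch factor: {narrowband / 10e-3:.5f}")
print(f"broadband stretch factor:  {broadband / 0.5e-3:.3f}")
```

With the same dispersion, the long envelope is practically unchanged, whereas the short one is stretched several times over, in line with the argument that narrowband signals are largely immune to group-velocity dispersion.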

Another effect of the bandwidth that is related to geometrical blur has to do with the degree of randomness of the signal. Unlike deterministic signals, a truly random signal does not interfere with a delayed copy of itself. Therefore, the notion of geometric blur as in reverberation applies best to random signals that do not interfere, and only mix in energy without consideration of the signal phase. In general, the more random a signal is, the broader its bandwidth is going to be, since its amplitude and phase cannot point to one frequency at all times. This reasoning is best captured by the concept of (degree of) coherence (also called correlation in the context of hearing and acoustics). It describes the ability of a signal to interfere with itself. A completely random signal, such as white noise (broadband spectrum), does not interfere with itself and is considered incoherent. A deterministic signal, such as a pure tone, can interfere with itself and is considered coherent. Roughly translating these terms into more intuitive understanding, a coherent source sounds more tonal—like a melodic musical instrument—whereas an incoherent sound source is more like noise. However, the vast majority of real-world sounds are neither completely tonal nor are they completely noise-like, so they can be classified as partially coherent.
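As a minimal numerical illustration of the ability of a signal to interfere with a delayed copy of itself, the sketch below estimates the magnitude of the normalized correlation between a signal and its delayed copy for a complex tone, for complex white noise, and for a mixture of the two. The function name, the lag, the mixing weight, and the signal parameters are assumptions made for illustration only.

```python
import cmath
import math
import random

def degree_of_coherence(x, lag):
    """Magnitude of the normalized correlation between x[n] and x[n + lag]."""
    a, b = x[:-lag], x[lag:]
    cross = sum(p * q.conjugate() for p, q in zip(a, b))
    power_a = sum(abs(p) ** 2 for p in a)
    power_b = sum(abs(q) ** 2 for q in b)
    return abs(cross) / math.sqrt(power_a * power_b)

rng = random.Random(0)   # fixed seed so the run is repeatable
n_samples = 20_000
lag = 37                 # arbitrary delay in samples

# Deterministic complex tone at 0.05 cycles per sample
tone = [cmath.exp(2j * math.pi * 0.05 * n) for n in range(n_samples)]
# Complex white Gaussian noise
noise = [complex(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) for _ in range(n_samples)]
# Tone plus weaker noise, standing in for a partially coherent source
mixture = [t + 0.5 * z for t, z in zip(tone, noise)]

coh_tone = degree_of_coherence(tone, lag)
coh_noise = degree_of_coherence(noise, lag)
coh_mixture = degree_of_coherence(mixture, lag)
print(coh_tone, coh_noise, coh_mixture)
```

The tone yields a value of essentially one, the noise a value close to zero, and the mixture falls in between, roughly at the ratio of tone power to total power.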

All in all, it appears that the auditory defocus is applied differentially to different types of signals. Coherent signals, which are largely unaffected by dispersion, are also unaffected by defocus. In contrast, partially coherent signals are made more incoherent by defocus, whereas incoherent signals remain incoherent after defocus as well.

The modulation transfer function

Returning to standard imaging theory, it should not come as a great surprise that the imaging process and quality differ depending on the kind of light that illuminates the object: coherence matters. This becomes immediately apparent when analyzing the spatial frequencies of the object, which make up the spectrum of the spatial envelope that is being imaged. A major result in imaging optics is that whatever diffractive and geometrical blurring effects (or other aberrations) beyond magnification (i.e., linear scaling) exist in the system, they can all be expressed through its "pupil function", which then leads to the derivation of the so-called modulation transfer functions (which differ for coherent and incoherent illumination).

In exact analogy to spatial optics, we obtain similar, degree-of-coherence-dependent modulation transfer functions in the temporal domain that incorporate the effects of dispersion in the aperture and blur due to defocus. Such functions are nothing new in hearing science, but so far they have been obtained only empirically without explanation for why the coherent and incoherent functions are different, whereas the present theory contains the first derivation of these functions from the basic principles of auditory imaging. Once these functions become available, all sorts of predictions may be offered to explain different auditory effects that have also been measured only empirically until now.

The theoretical modulation transfer functions that have been obtained here are only good as a first approximation and there are clear discrepancies from experiment in several cases. It is argued that a major reason for the discrepancy is the discrete nature of the transduction. Irregular spiking in the auditory nerve and further downstream in the auditory system is tantamount to repeatedly sampling the original signal at nonuniform intervals, which tends to degrade the possible image at the output of the system. In very subtle contexts it may also create perceivable artifacts, which are not captured by the analytically derived (continuous) modulation transfer function (Point 6).

Coherence conservation and the phase-locked loop

It is necessary to take a small detour in the auditory imaging account and introduce another element to the discussion that is borrowed from communication and control theories. In the brief mention of coherent signals above we sidestepped an important question: while we know that coherence propagates in space according to the wave equation (Point 5), do we also know for certain that it is conserved in the ear? Notably, does the transduction from mechanical to neural information conserve the degree of coherence of the original signal? A big clue seems to suggest that the answer is yes: signals are known to phase lock in the auditory nerve, following transduction by the inner hair cells. This applies to coherent (tonal) signals, and to a lesser degree to other signals, where incoherent signals only lock to the slow envelope phase and not to the random carrier. Phase locking to coherent signals is special in hearing compared to vision, where the phase of the light wave changes too rapidly to be tracked by a biological system.

Phase locking is a hallmark of coherent reception, a form of information transfer in communication theory that tracks the minute variations in the phase of the (complex) temporal envelope of a signal. Realizing this form of reception requires an oscillator, which is an active and nonlinear component within the receiver. (In the complementary noncoherent reception, which only tracks the slowly-varying envelope magnitude, an oscillator is not strictly necessary.) A closer inspection of the cochlear mechanics and transduction suggests that phase locking first emerges at the cochlea, before it becomes manifest in the auditory nerve. Therefore, it seems reasonable to look for the components of a classical coherent detector (Point 4) that provides phase locking, i.e., a phase-locked loop (PLL). For a PLL to be constructed, we require a phase detector, a filter, an oscillator, and a feedback loop that returns the output to the phase detector. All these components can be identified within the organ of Corti and the outer hair cells.
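The four PLL components listed above can be seen in the following generic, textbook-style sketch of a second-order digital PLL that locks to a complex tone. It is not the auditory PLL model of the main text, and no mapping to the organ of Corti is attempted. The function name, the loop gains `kp` and `ki`, and all frequencies are hypothetical values picked so that the loop is stable and stays in its linear regime.

```python
import cmath
import math

def run_pll(w_in, phase0, w0, kp, ki, n_steps):
    """Track a complex tone exp(i * (w_in * n + phase0)) with a digital PLL.

    w0 is the free-running oscillator frequency (rad/sample); kp and ki are
    the proportional and integral gains of the loop filter.
    Returns the final phase error and the final frequency estimate.
    """
    osc_phase = 0.0    # phase of the local oscillator
    integrator = 0.0   # integral branch of the loop filter
    error = 0.0
    for n in range(n_steps):
        x = cmath.exp(1j * (w_in * n + phase0))            # incoming signal
        # Phase detector: wrapped phase difference between input and oscillator
        error = cmath.phase(x * cmath.exp(-1j * osc_phase))
        # Loop filter: proportional-plus-integral smoothing of the error
        integrator += ki * error
        control = kp * error + integrator
        # Oscillator with feedback: the control signal steers its frequency
        osc_phase += w0 + control
    return error, w0 + integrator

w0 = 2.0 * math.pi * 0.100     # oscillator starts at 0.100 cycles per sample
w_in = 2.0 * math.pi * 0.102   # input is slightly detuned
final_error, w_est = run_pll(w_in, phase0=0.5, w0=w0, kp=0.1, ki=0.005, n_steps=2000)
print(f"final phase error: {final_error:.2e} rad")
print(f"estimated frequency: {w_est / (2.0 * math.pi):.6f} cycles/sample")
```

After the transient, the phase error decays to practically zero and the integrator holds the frequency offset between the input and the free-running oscillator, which is the sense in which the loop is locked to the input phase.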

Conventional theory holds that the outer hair cells perform cycle-by-cycle amplification of the incoming signal, but theory and experiment are still in disagreement as to how exactly this process works. The PLL model does not necessarily clash with this standard amplification model, and might even interact with it, by incorporating amplification into its own feedback loop. A similar argument may be made about the time lens and the PLL: their functions may not necessarily be in conflict.

As it currently appears in the main text, the auditory PLL model is strictly qualitative and, accordingly, speculative. However, it does provide the missing link for coherence conservation between the outside world and the brain—a link that has been glossed over until now and is critical for the understanding of how the auditory system handles different kinds of stimuli according to their degree of coherence.

Auditory imaging concepts

With the imaging system specified, we can now turn to explore some of the hallmark concepts of imaging theory and apply them to hearing, beyond those of auditory sharpness and blur.

First, we calculate the temporal resolving power between two pulses using the estimated temporal modulation transfer function. This is analogous to the resolving power between two object points, which is essential information in telescopy, for example. The predictions are comparable to empirical findings from relevant studies, especially in the 1000–8000 Hz range.

We also elaborate on the auditory analogs to monochromatic and polychromatic images. It is argued that the various pitch types can be thought of as the quintessential monochromatic image (pure tone, unresolved complex tone) or polychromatic image (resolved complex tone, interrupted pitch)—all of which highlight different periodicities in the acoustic object.

This understanding can then be used to hypothesize deviations from perfect imaging, namely, various monochromatic and polychromatic aberrations. Monochromatic aberrations relate to the variation of the group delay within the auditory channel, whereas polychromatic aberrations relate to variations between channels. Several examples of the different types are given, based on known phenomena from the psychoacoustic literature, as well as a new effect.

Finally, we can hypothesize about the auditory depth of field, which is temporal rather than spatial. It should be most clearly observable between objects of different degrees of coherence. For example, it is argued that forward masking can be readily recast as a small depth of field, in the case of an incoherent (broadband noise) masker and a coherent (pure tone) probe, since the boundary between them is effectively blurred as a result of forward masking. However, when the masker and probe are of the same type (e.g., incoherent and incoherent), then the depth of field is large, as the forward masking becomes longer.

Auditory accommodation and impairments

An even bigger leap in applying the analogy between optical / visual imaging and acoustical / auditory imaging is the search for auditory accommodation. In vision, accommodation is an unconscious mechanical process that varies the focal length of the lens to match the distance of the object, so as to bring its image to sharp focus on the retina for an arbitrary distance of the object. Accommodation involves several ocular muscles that are fed by an efferent nerve from the midbrain, which together with the output from the retina form a feedback loop.

We have already stated that the ear is defocused, so what could possibly be the use of auditory accommodation in this context? One attractive answer is to control the degree of coherence that enters the neural system, which, as was argued above, is captured by the degree of phase locking. This is interesting because of much converging empirical evidence that shows how the hearing system can process sound either according to its slowly-varying temporal envelope (its magnitude), or using phase locking to track the fast variation of the carrier phase (the so-called temporal fine structure of the stimulus). The difference between the two processing schemes runs throughout the very physiology of the auditory brainstem, which appears to have dedicated parts for each type of processing. Coming full circle, this differentiation is akin to the types of imaging that exist in optics: coherent, incoherent, or a mixture of the two, which is partially coherent. It is also akin to the detection schemes that are used in standard communication engineering: either coherent or noncoherent. Applying a variable stage in coherence processing may be achieved, for example, by the medial olivocochlear reflex, which acts through an efferent nerve that innervates the outer hair cells and whose function is not well understood. But additional mechanisms to achieve the same function may exist. Once again, this involves considerable speculation at present, but evidence to support this and related possibilities does exist and is discussed in depth in the main text.

Although there are many uncertainties about the specifics of this system, the penultimate chapter is dedicated to hypothesizing what happens when things go wrong: what is the effect of faulty imaging that may translate into hearing impairments? There are several possible answers here, with dysfunction in hypothetical auditory accommodation being the most attractive candidate. Nevertheless, evidence here is difficult to gather and much work has to be done to uncover the basics before turning to these more challenging, yet important, questions.